Offsite NAS Backup to AWS S3 Glacier

2024-10-03

11 min read

Icon Licensing: NAS & money icons created by Freepik - Flaticon

Spoiler alert: This post is NOT sponsored by AWS.

I've set up my NAS in the previous 2 posts (part 1 and part 2), but I was missing a huge part: backup. In this post, I'll share how I made periodic backups to AWS S3 Glacier.

Why Backup? Why S3?

As I've been told numerous times, RAID is not a backup. There are many ways I can screw up my data even if the redundancy is there. A non-exhaustive list off the top of my head:

- The disks could fail.

- I could accidentally delete an entire dataset or an important folder (but snapshots can help).

- Ransomware could encrypt all my files.

- (Extreme case, but) fire or earthquake destroys my house.

Hence, I still need an offsite backup. Currently the critical data I own are around 400 GB, mostly photos and service data folders. I've got two options:

- Build a second NAS and keep it at a friend's house. Cost analysis: the cheapest option is buying a used HP Elitedesk for ~$60 and adding drives (2x HDD & 1x SSD). It also burns electricity.

- Surrender to capitalism and use Backblaze B2 or AWS S3 Glacier instead.

Let's take a closer look at the cost of the first choice. Currently, the most affordable hard drives are WD 10 TB enterprise drives at ~$110 each (well, you can buy 1 TB consumer drives at $40, but why?). That means I'll spend at least $320 on this machine, and I also need to pay the electricity bill. Since I only have 400 GB of data to back up, it hardly justifies the price. On the other hand, Backblaze B2 offers flat pricing of $5/TB per month, and AWS S3 Glacier Deep Archive, which stores data on tapes, only costs $1.014 per TB per month†. That sounds much more attractive, at least until I accumulate a few TBs of data.

†: Of course there's a long footnote to the S3 Glacier pricing. I'll discuss it later.

Between S3 Glacier and Backblaze, I chose S3 because I want to keep multiple full copies of data, and the storage cost of B2 rockets quickly if I want to store, say, 6 monthly full backups. At $5*6*0.4=$12 per month, it's more reasonable to go with option 1. So, S3 Glacier.
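Here's a quick sketch of that arithmetic, in case you want to plug in your own numbers. `monthly_cost` is a hypothetical helper written for this post, and the per-TB prices are simply the ones quoted above; check the current pricing pages before trusting the result.

```shell
#!/bin/bash
# Monthly storage cost = price per TB-month * number of full copies * size in TB.
# monthly_cost is a hypothetical helper, not part of any tool.
monthly_cost() {
    local price_per_tb="$1" copies="$2" size_tb="$3"
    awk -v p="$price_per_tb" -v n="$copies" -v s="$size_tb" \
        'BEGIN { printf "%.2f\n", p * n * s }'
}

B2_COST=$(monthly_cost 5 6 0.4)        # Backblaze B2 at $5/TB (price quoted above)
GDA_COST=$(monthly_cost 1.014 6 0.4)   # Deep Archive at $1.014/TB (price quoted above)

echo "B2:           \$${B2_COST} per month"
echo "Deep Archive: \$${GDA_COST} per month"
```

This only covers storage. Requests, staging and egress are extra.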

What's the catch?

Before I go over the steps of backing up to S3, let me warn you of some pricing nuances few have mentioned elsewhere. On top of the $1.014/TB storage cost, there are some other costs of using Glacier:

- S3 Glacier Deep Archive charges $0.005 per 1,000

UploadPartrequests. Per my guess, the default chunk size of an upload ismax(8MB, total size / 10000)(they mentioned the default 8 MB size but what about files larger than 80 GB?), because an upload can only contain 10,000 parts max. Say I'm uploading a 300 GB file, theaws s3 cpcommand will generate 10,000 requests for this upload even if it can just do it with 75 chunks of 4 GB each. Which means $0.05 for every single file larger than 80 GB. The result is 32,000 requests for 7 days of backing up when it could make it under 1,000. - The real pain is when you abort uploading a large full backup. I had this happen because there was a mistake in my script and it attempted to upload a second full copy when it should do a minimal incremental backup. I aborted the upload, and found I was charged $0.64 four days since I registered the AWS account. For comparison, the expected cost for an entire month is $0.4. I dug the bill and found this line:

Amazon Simple Storage Service TimedStorage-GDA-Staging

$0.021 per GB-Month of storage used in GlacierStagingStorage

27.029 GB-Mo

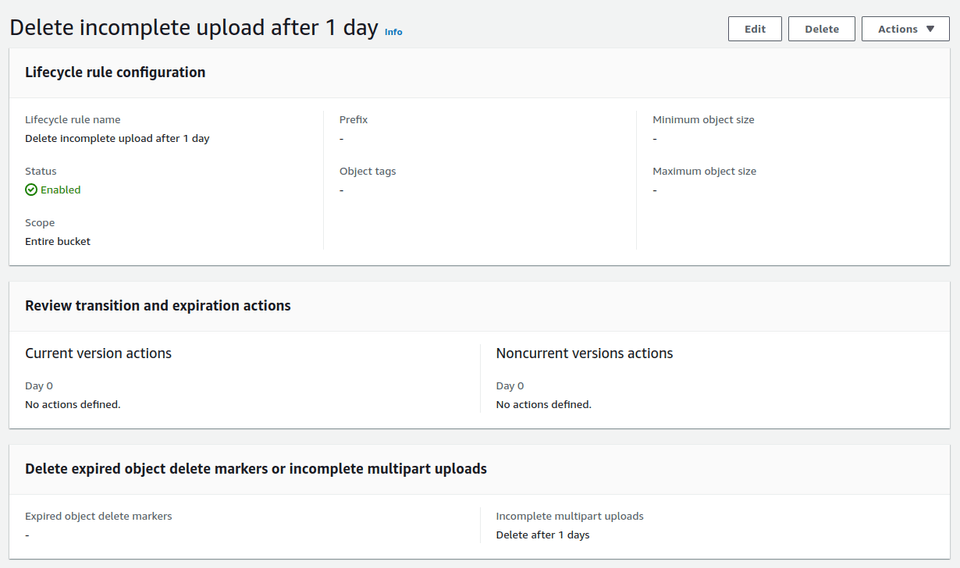

USD 0.57Cool, S3 thinks the upload hasn't aborted and charges me of the unfinished 202 GB upload with the fucking price of S3 standard!!! I wouldn't even notice the ridiculous surcharge if I didn't look at the cost center accidentally. The correct way to handle it is to add an object lifecycle rule that deletes incomplete upload after 1 day, but it's rarely mentioned in any homelab backup blogs.

- S3 Glacier Deep Archive keeps objects for at least 180 days, so there'll be at least 6 monthly full backups at any time. That brings my monthly cost to $2.4.

- Also don't forget the $95/TB egress fee when you need to access the backup! But let's hope my house doesn't burn down anytime soon and live with that fact for the moment.

You have been warned.
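To put numbers on the request-count issue, here's a rough sketch. The `max(8 MiB, size / 10000)` default is only the guess described above, not documented AWS CLI behaviour, and all three function names are made up for this example.

```shell
#!/bin/bash
# Ceiling division: parts needed for a file of SIZE bytes split into CHUNK-byte parts.
part_count() {
    local size="$1" chunk="$2"
    echo $(( (size + chunk - 1) / chunk ))
}

# Chunk size guessed in the text: max(8 MiB, size / 10000).
# This is an assumption, not documented AWS CLI behaviour.
guessed_default_chunk() {
    local size="$1" min_chunk=$(( 8 * 1024 * 1024 ))
    local scaled=$(( (size + 9999) / 10000 ))
    echo $(( scaled > min_chunk ? scaled : min_chunk ))
}

# Request cost in USD at $0.005 per 1,000 UploadPart requests.
request_cost() {
    awk -v n="$1" 'BEGIN { printf "%.6f\n", n * 0.005 / 1000 }'
}

GIB=$(( 1024 * 1024 * 1024 ))
FILE_SIZE=$(( 300 * GIB ))

DEFAULT_PARTS=$(part_count "$FILE_SIZE" "$(guessed_default_chunk "$FILE_SIZE")")
TUNED_PARTS=$(part_count "$FILE_SIZE" $(( 4 * GIB )))

echo "guessed default: ${DEFAULT_PARTS} parts, \$$(request_cost "$DEFAULT_PARTS")"
echo "4 GiB chunks:    ${TUNED_PARTS} parts, \$$(request_cost "$TUNED_PARTS")"
```

Under these assumptions the 300 GiB file drops from 10,000 parts to 75, which is why the chunk size is worth changing before the first big upload.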

Technical Steps

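One setting to apply as soon as the AWS CLI is installed (see Configure NAS Backup below): raise the multipart chunk size so large uploads need far fewer `UploadPart` requests.

sudo aws configure set default.s3.multipart_chunksize 4GB

sudo aws configure set default.s3.multipart_threshold 4GB

Configure AWS Account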

Now, let's get our hands dirty. As a start, we need to create an AWS account. I'll skip this part and assume you have a fresh account ready.

Choose a region that's far away from your home. I'm on the West Coast, so I chose us-east-1 (N. Virginia).

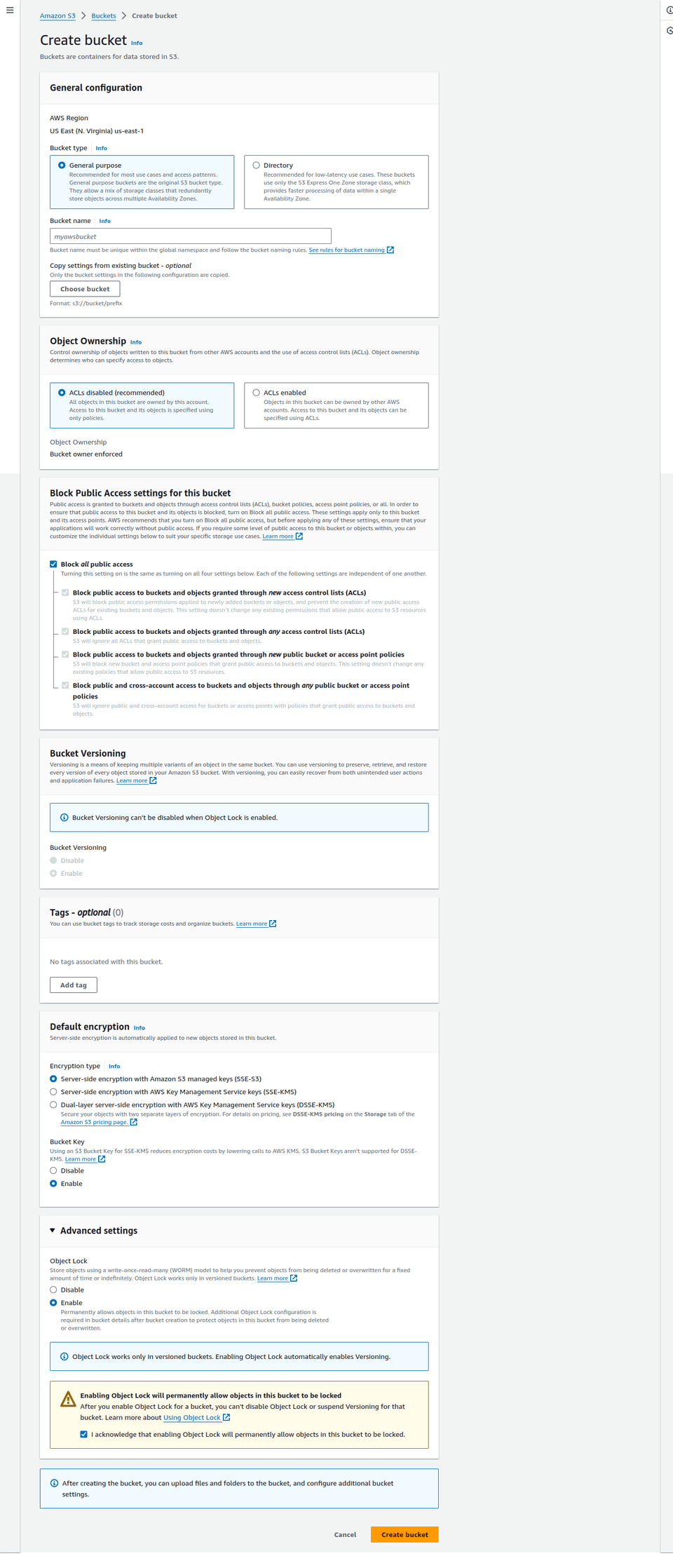

In the AWS console, go to S3 and create a new bucket. The bucket name must be globally unique (i.e. unique across all accounts). The bucket needs to have the following properties:

- Block all public access. This forces every object in the bucket to be private, so I can't expose files by mistake.

- Enable bucket versioning and object lock. I need to make sure no one can delete the objects by any means without a highly privileged account. Since S3 Glacier Deep Archive charges at least 180 days of storage for any object, I'm going to set the lock period to 180 days later and only delete objects once they have expired.

- Note: I'm not actually using versioning. It's just to enable object lock.

- Leave everything else at the defaults, meaning ACLs disabled, default encryption, and bucket key enabled.

Confirm and create the bucket.

Now go to the property panel of our newly created bucket. We need to configure the 180-day locking policy. Edit the Object Lock section to the following:

- Object lock: Enabled

- Default retention: Enabled

- Default retention mode: Governance (you don't want to use compliance and pay permanently for an object)

- Default retention period: 180 days
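If you'd rather script this than click through the console, the same default retention can probably be set with `aws s3api put-object-lock-configuration`. This is a sketch I haven't run against this exact bucket: `set_default_retention` is a hypothetical wrapper, and it has to be run with admin credentials, not the restricted backup user created later.

```shell
#!/bin/bash
# Default retention matching the console settings above: governance mode, 180 days.
OBJECT_LOCK_CONFIG='{
  "ObjectLockEnabled": "Enabled",
  "Rule": {
    "DefaultRetention": { "Mode": "GOVERNANCE", "Days": 180 }
  }
}'

# Hypothetical wrapper around the real s3api call; the argument is your bucket name.
# Requires credentials that are allowed to change the bucket's object lock settings.
set_default_retention() {
    local bucket="$1"
    aws s3api put-object-lock-configuration \
        --bucket "$bucket" \
        --object-lock-configuration "$OBJECT_LOCK_CONFIG"
}

# Usage (deliberately not run automatically):
#   set_default_retention YOUR_BUCKET_NAME
```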

IMPORTANT: Go to the Management panel and create a lifecycle rule under the Lifecycle rules section. This rule removes all incomplete multipart uploads in the bucket after 1 day, so they won't cost you a fortune like they cost me.

- Rule scope: Apply to all objects in the bucket

- Lifecycle rule actions: Delete expired object delete markers or incomplete multipart uploads

- Delete expired object delete markers or incomplete multipart uploads -> Incomplete multipart uploads: check `Delete incomplete multipart uploads` and set `Number of days` to 1

Well, I should have created another lifecycle rule to delete all objects after 180 days, but I left that as homework to the future me.
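For reference, the incomplete-upload cleanup rule can also be expressed as a lifecycle configuration and applied from the CLI. Treat this as a sketch: the rule ID and both function names are made up for this example. Note that `put-bucket-lifecycle-configuration` replaces the bucket's whole lifecycle configuration, and that the restricted backup user created below doesn't have permission to run either command.

```shell
#!/bin/bash
# Lifecycle rule: abort incomplete multipart uploads one day after they start.
# The rule ID is an arbitrary name chosen for this sketch.
LIFECYCLE_CONFIG='{
  "Rules": [
    {
      "ID": "abort-incomplete-multipart-uploads",
      "Status": "Enabled",
      "Filter": { "Prefix": "" },
      "AbortIncompleteMultipartUpload": { "DaysAfterInitiation": 1 }
    }
  ]
}'

# Apply the rule to a bucket (replaces any existing lifecycle configuration).
apply_lifecycle_rule() {
    local bucket="$1"
    aws s3api put-bucket-lifecycle-configuration \
        --bucket "$bucket" \
        --lifecycle-configuration "$LIFECYCLE_CONFIG"
}

# List uploads that were started but never completed or aborted.
list_incomplete_uploads() {
    local bucket="$1"
    aws s3api list-multipart-uploads --bucket "$bucket"
}

# Usage (deliberately not run automatically):
#   apply_lifecycle_rule YOUR_BUCKET_NAME
#   list_incomplete_uploads YOUR_BUCKET_NAME
```

Running `list_incomplete_uploads` after an aborted upload is a cheap way to confirm nothing is still sitting in staging storage.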

We're done with the bucket. Now, let's create an IAM user with just enough permissions to upload backups to the bucket.

Go to IAM->Users and create a new user. Disable console access as we only use this user in a script. Add a new custom permission with the following content:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:ListBucket",
        "s3:GetBucketLocation"
      ],
      "Resource": [
        "arn:aws:s3:::YOUR_BUCKET_NAME/*",
        "arn:aws:s3:::YOUR_BUCKET_NAME"
      ]
    }
  ]
}

Go ahead and create the user. Create an access key if one is not generated already, and keep note of it. We're done with the AWS account (although you'll constantly go back in paranoia to check how much you've been charged).

Configure NAS Backup

On my NAS, I first installed the AWS CLI tool and configured it. The awscli package in the Debian repo is a bit outdated, but it's enough for our use case.

sudo apt install awscli

aws configure # Enter region and your IAM user access key

We're almost done. The only thing left is a backup script. The script executes the following for each ZFS dataset we want to back up:

- Take a monthly full snapshot if none exists for the current month (we can change this to once every two months to halve the storage cost)

- Take a daily incremental snapshot if the current monthly snapshot exists

- Use `zfs send` to convert the snapshot into a file and upload it to S3 Glacier

- Delete old snapshots (snapshots created before the latest full backup) from the NAS

Here's the script I created for this purpose:

#!/bin/bash

# Configuration
DATASETS=("mypool/backup" "mypool/immich" "mypool/photos") # Datasets we want to back up
DEST_DIR="/mypool/snapshots" # Destination folder. Remember to turn off auto snapshots of this folder, otherwise your disk space will vanish quickly
SNAPSHOT_PREFIX="s3backup"

get_current_date() {
    date "+%Y-%m-%d"
}

get_current_month() {
    date "+%Y-%m"
}

create_full_snapshot() {
    local DATASET="$1"
    local SNAPSHOT_NAME="${DATASET}@${SNAPSHOT_PREFIX}-full-$(get_current_date)"
    echo "Creating full snapshot: $SNAPSHOT_NAME"
    zfs snapshot "$SNAPSHOT_NAME"
    send_snapshot "$SNAPSHOT_NAME"
}

create_incremental_snapshot() {
    local DATASET="$1"
    local LAST_SNAPSHOT=$(zfs list -t snapshot -o name -s creation -d 1 "${DATASET}" | grep "@${SNAPSHOT_PREFIX}-" | tail -1)
    local SNAPSHOT_NAME="${DATASET}@${SNAPSHOT_PREFIX}-incremental-$(get_current_date)"
    echo "Creating incremental snapshot: $SNAPSHOT_NAME based on $LAST_SNAPSHOT"
    zfs snapshot "$SNAPSHOT_NAME"
    send_snapshot "$LAST_SNAPSHOT" "$SNAPSHOT_NAME"
}

send_snapshot() {
    local SOURCE_SNAPSHOT=$1
    local TARGET_SNAPSHOT=$2
    if [ -z "$TARGET_SNAPSHOT" ]; then
        # Full backup, no incremental source
        local FILENAME="$(echo "${SOURCE_SNAPSHOT}" | sed 's/\//-/g')-full.zfs"
        local FULL_FILENAME="${DEST_DIR}/${FILENAME}"
        echo "Sending full snapshot: $SOURCE_SNAPSHOT to $FULL_FILENAME"
        zfs send "$SOURCE_SNAPSHOT" > "$FULL_FILENAME"
    else
        # Incremental backup
        local FILENAME="$(echo "${SOURCE_SNAPSHOT}" | sed 's/\//-/g')-inc-$(echo "${TARGET_SNAPSHOT}" | sed 's/\//-/g').zfs"
        local FULL_FILENAME="${DEST_DIR}/${FILENAME}"
        echo "Sending incremental snapshot: $SOURCE_SNAPSHOT -> $TARGET_SNAPSHOT to $FULL_FILENAME"
        zfs send -i "$SOURCE_SNAPSHOT" "$TARGET_SNAPSHOT" > "$FULL_FILENAME"
    fi
    echo "Uploading snapshot $FILENAME to S3 bucket"
    aws s3 cp "$FULL_FILENAME" "s3://YOUR_BUCKET_NAME/${FILENAME}" --storage-class DEEP_ARCHIVE
}

cleanup_old_snapshots() {
    local DATASET="$1"
    echo "Cleaning up old snapshots for dataset: $DATASET..."
    # Find the latest full snapshot
    local LATEST_FULL_SNAPSHOT=$(zfs list -t snapshot -o name -s creation -d 1 "${DATASET}" | grep "@${SNAPSHOT_PREFIX}-full-" | tail -1)
    if [ -z "$LATEST_FULL_SNAPSHOT" ]; then
        echo "No full snapshots found for $DATASET. Skipping cleanup."
        return
    fi
    local LATEST_FULL_DATE=$(echo "$LATEST_FULL_SNAPSHOT" | grep -oP "\d{4}-\d{2}-\d{2}")
    # Delete snapshots older than the latest full snapshot, created by this script
    zfs list -t snapshot -o name -s creation -d 1 "${DATASET}" | grep "@${SNAPSHOT_PREFIX}-" | while read -r SNAPSHOT; do
        SNAPSHOT_DATE=$(echo "$SNAPSHOT" | grep -oP "\d{4}-\d{2}-\d{2}")
        if [[ "$SNAPSHOT_DATE" < "$LATEST_FULL_DATE" ]]; then
            echo "Deleting old snapshot: $SNAPSHOT"
            zfs destroy "$SNAPSHOT"
        fi
    done
}

for DATASET in "${DATASETS[@]}"; do
    echo "Processing dataset: $DATASET"
    # Check if a full snapshot exists for the current month
    CURRENT_MONTH=$(get_current_month)
    FULL_SNAPSHOT_EXISTS=$(zfs list -t snapshot -o name -d 1 "$DATASET" | grep "${DATASET}@${SNAPSHOT_PREFIX}-full-${CURRENT_MONTH}")
    if [ -z "$FULL_SNAPSHOT_EXISTS" ]; then
        # Create a full snapshot if not exists for the current month
        create_full_snapshot "$DATASET"
    else
        # Create an incremental snapshot if a full snapshot already exists
        create_incremental_snapshot "$DATASET"
    fi
    cleanup_old_snapshots "$DATASET"
done

rm -rf "$DEST_DIR"/* # Delete snapshot files as they have been uploaded

Two things to clarify:

- I store the ZFS streams as files, uncompressed and unencrypted. Compression turned out to be useless because the files I back up are mostly already-compressed photos and videos, so compressing them again just wastes CPU cycles. But it's bad practice not to encrypt files before uploading them anywhere. I should add encryption later.

- Be sure to set the default chunk size of Glacier uploads!
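On the missing encryption from the first point: one possible approach is to pipe the stream through `openssl enc` before it hits the disk. This is only a sketch, not what my script currently does. It assumes OpenSSL 1.1.1 or newer (for `-pbkdf2`), the key file path is a placeholder, and the function names are made up. AES-CBC also doesn't authenticate the data, so a tool like `gpg` or `age` may be a better fit.

```shell
#!/bin/bash
# encrypt_stream KEYFILE: read plaintext on stdin, write AES-256 ciphertext to stdout.
# The passphrase is read from the first line of KEYFILE.
encrypt_stream() {
    openssl enc -aes-256-cbc -pbkdf2 -salt -pass "file:$1"
}

# decrypt_stream KEYFILE: the inverse operation, needed when restoring.
decrypt_stream() {
    openssl enc -d -aes-256-cbc -pbkdf2 -pass "file:$1"
}

# Example wiring into the backup script (not run here; the key path is a placeholder):
#   zfs send "$SOURCE_SNAPSHOT" | encrypt_stream /root/backup.key > "$FULL_FILENAME"
# And for a restore:
#   decrypt_stream /root/backup.key < downloaded-file.zfs | zfs receive mypool/restored
```

Keep a copy of the key somewhere other than the NAS, otherwise the offsite backup is useless in exactly the scenarios it exists for.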

Cool, we're basically all set. Now, just set the script to run periodically and we're done.

sudo chown root:root /path/to/the/script.sh

sudo chmod 700 /path/to/the/script.sh

sudo crontab -e # Inside crontab editor, add a daily job running the script.

Result

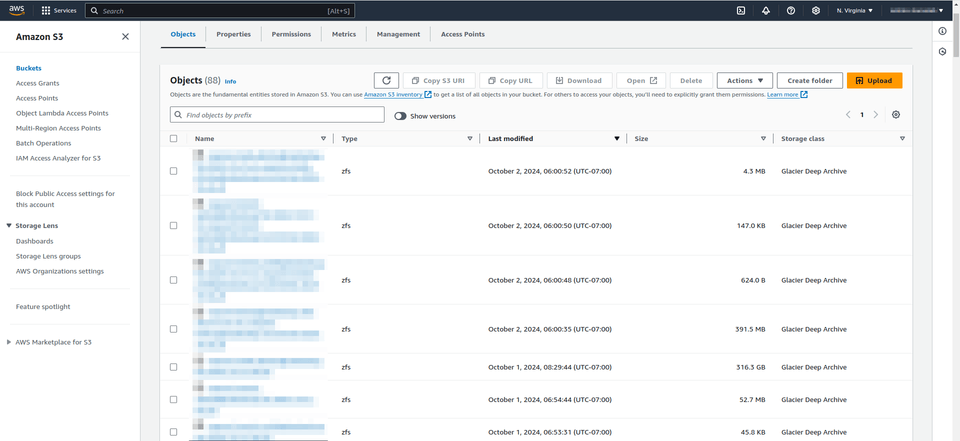

Here's how it looks in my S3 dashboard. Looks good, right?

Improvements

There are two concerns with my current approach, aside from the cost of recovering from S3 Glacier:

- The unencrypted uploads. I'll definitely fix it in the future.

- I didn't verify (and can't verify) whether those backups actually work. Maybe the files sitting in the bucket are all broken, but I have no way to know without downloading them at $95/TB and performing a dry-run recovery. That's one of the huge drawbacks of backing up to S3 Glacier. I don't think I can ever solve the problem until I eventually switch to an offsite backup NAS.
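A partial mitigation, sketched below, is to record a SHA-256 checksum of every stream file before it's uploaded. It doesn't prove a stream can be restored, but after a download it at least tells you whether the file is byte-for-byte what left the NAS. The function names are hypothetical, the commands assume GNU coreutils, and the manifest must live outside the snapshot folder because the backup script wipes that folder at the end.

```shell
#!/bin/bash
# record_checksum FILE MANIFEST: append "<sha256>  <basename of FILE>" to MANIFEST.
record_checksum() {
    local file="$1" manifest="$2"
    ( cd "$(dirname "$file")" && sha256sum "$(basename "$file")" ) >> "$manifest"
}

# verify_checksums DIR MANIFEST: re-hash the files in DIR and compare with MANIFEST.
# MANIFEST must be an absolute path because the check runs from inside DIR.
# Returns non-zero if any listed file is missing or differs.
verify_checksums() {
    local dir="$1" manifest="$2"
    ( cd "$dir" && sha256sum --check --quiet "$manifest" )
}

# Example wiring (not run here; paths are placeholders):
#   record_checksum "$FULL_FILENAME" /root/s3backup-manifest.sha256   # before upload
#   verify_checksums /path/to/downloads /root/s3backup-manifest.sha256  # after a restore download
```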